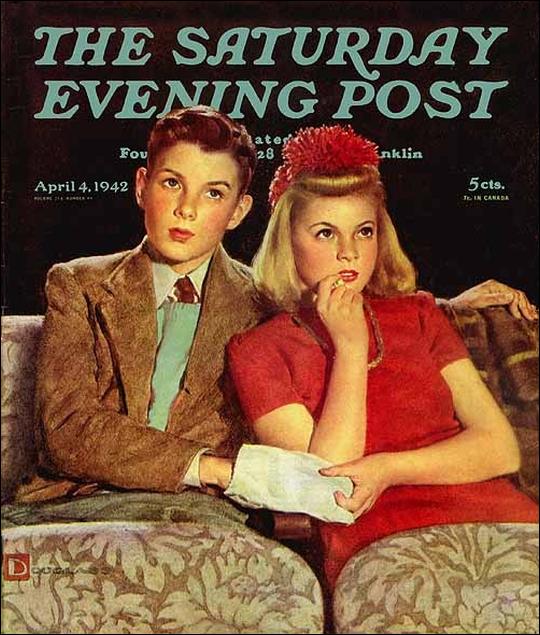

Popular Culture and The Rise of Hollywood

The rise of Hollywood signaled the arrival of America’s urban-industrial age, a period when traditional values and established notions of family and community, of the social and political order, and of individual freedom and initiative were radically transformed. Hollywood movies were among the first, and certainly the most widespread and accessible, manifestations of an emergent “mass culture,” which brought with it new forms of cultural expression.

Popular Culture and The Rise of Hollywood Read More